RESEARCH

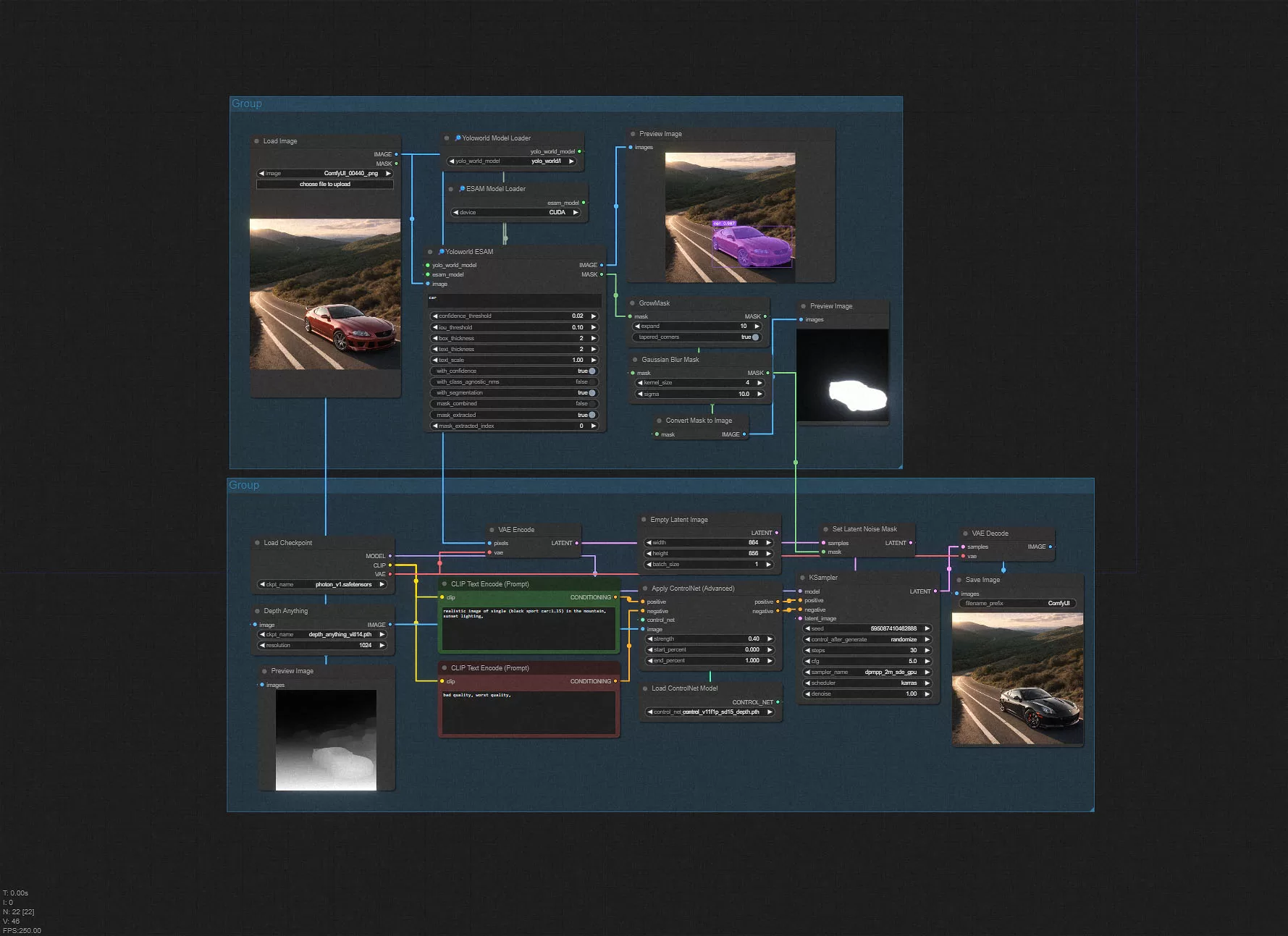

Computer Vision masking in ComfyUI

AI | 2024

This research investigates a workflow utilizing computer vision detection models within ComfyUI for efficient masking. The objective is to evaluate the extent to which this approach can be optimized and to identify scenarios where it offers a faster and more convenient alternative to traditional rotoscoping.

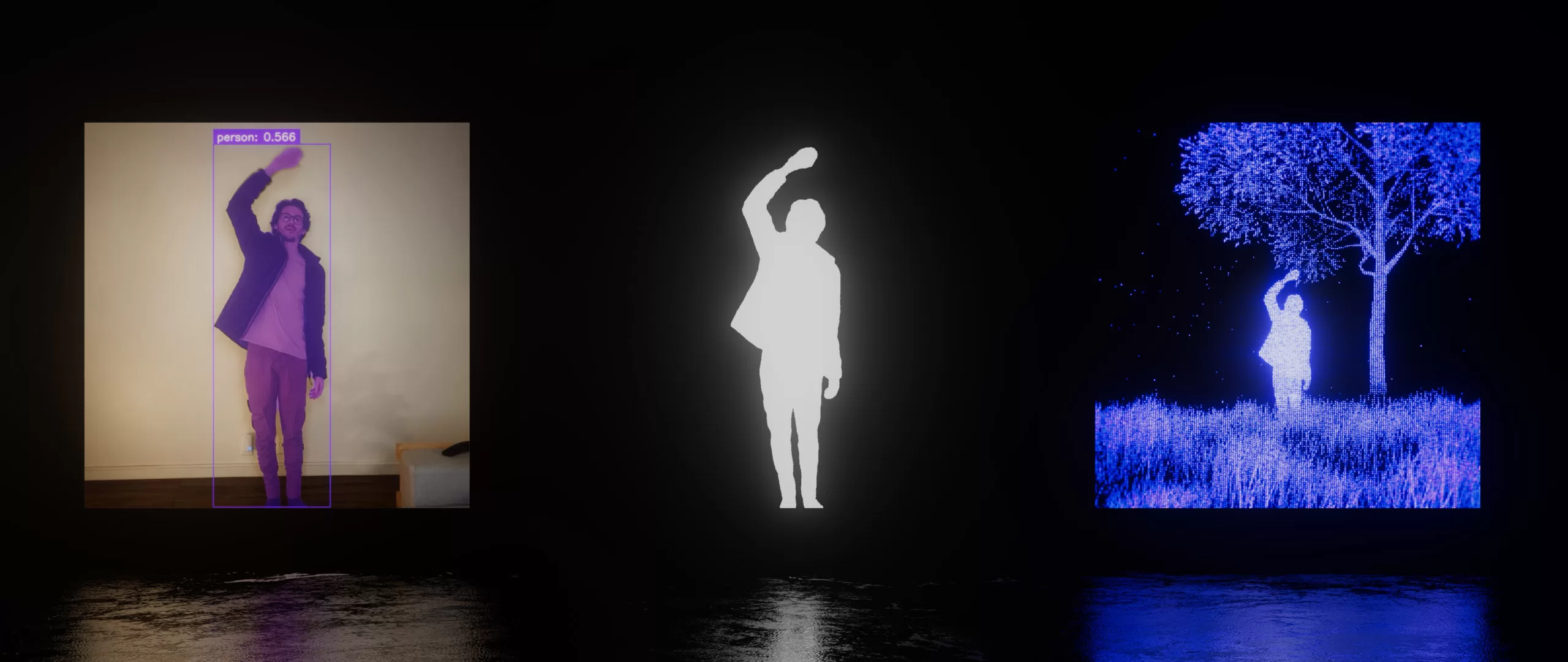

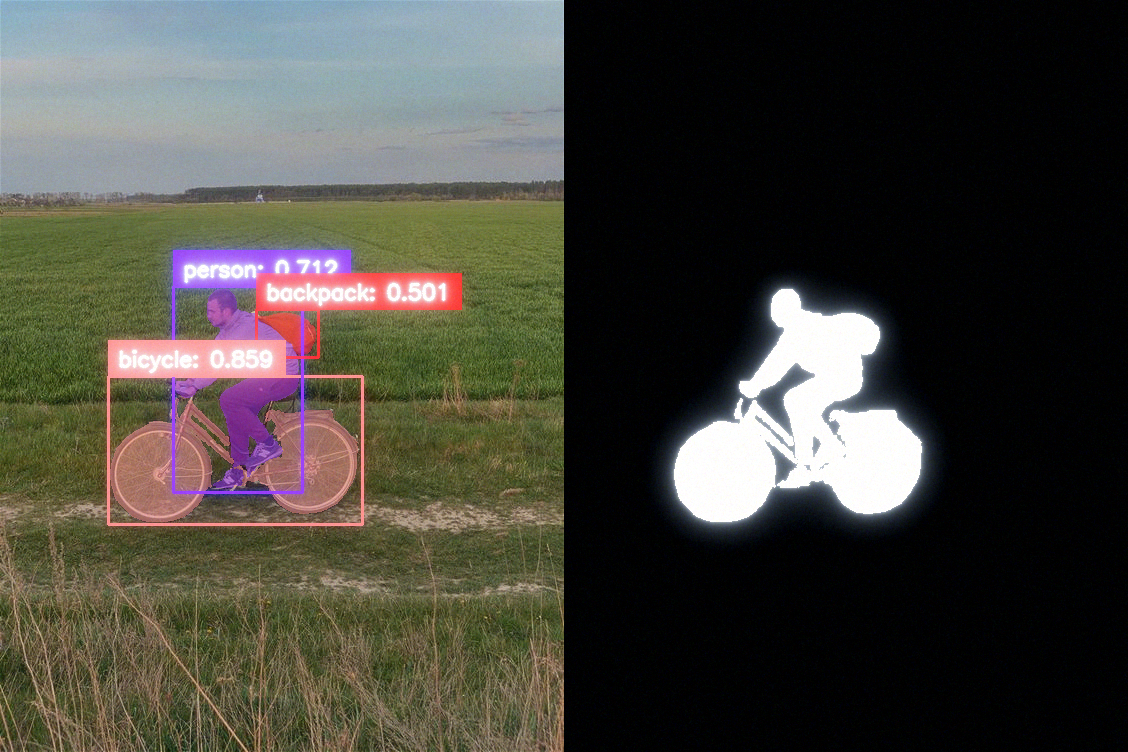

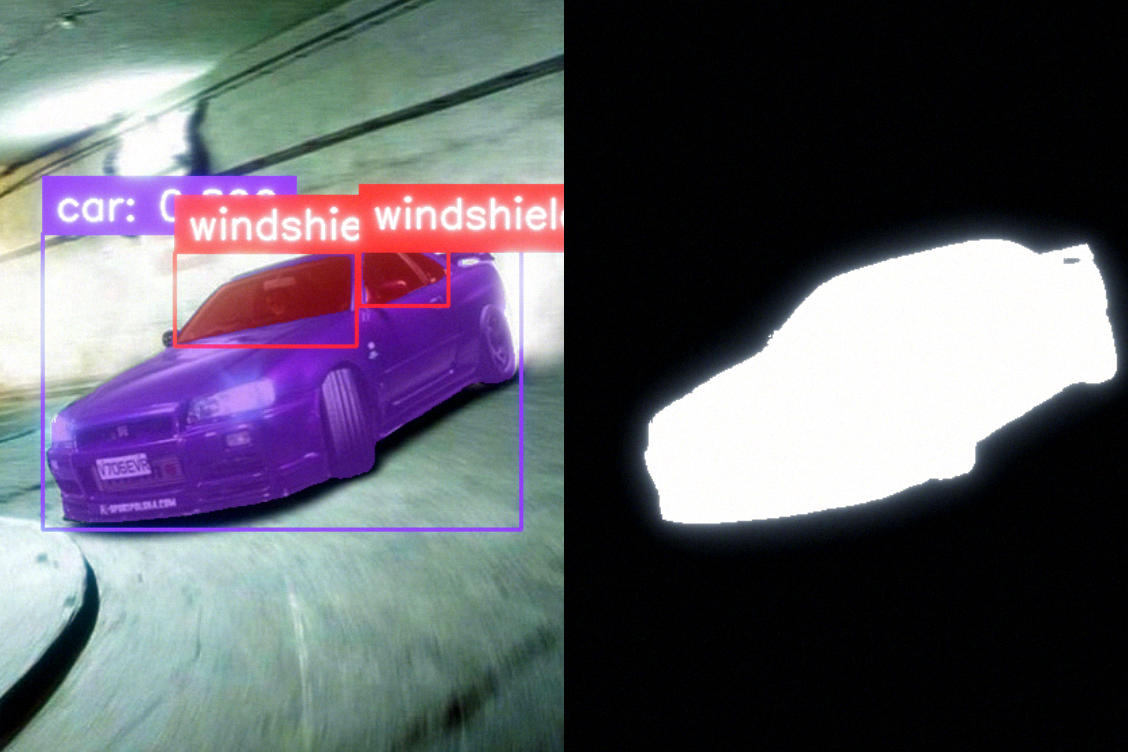

STATIC IMAGE DETECTION

Yoloworld models process images very quickly, tagging entities in an image within approximately 0.2 to 0.3 seconds. However, accuracy varies on a case-by-case basis, and I am not currently aware of a method to increase it by adjusting processing passes. Below are some examples.

PROMPT: bicycle, person, backpack,

PROMPT: people,

PROMPT: car, windshield

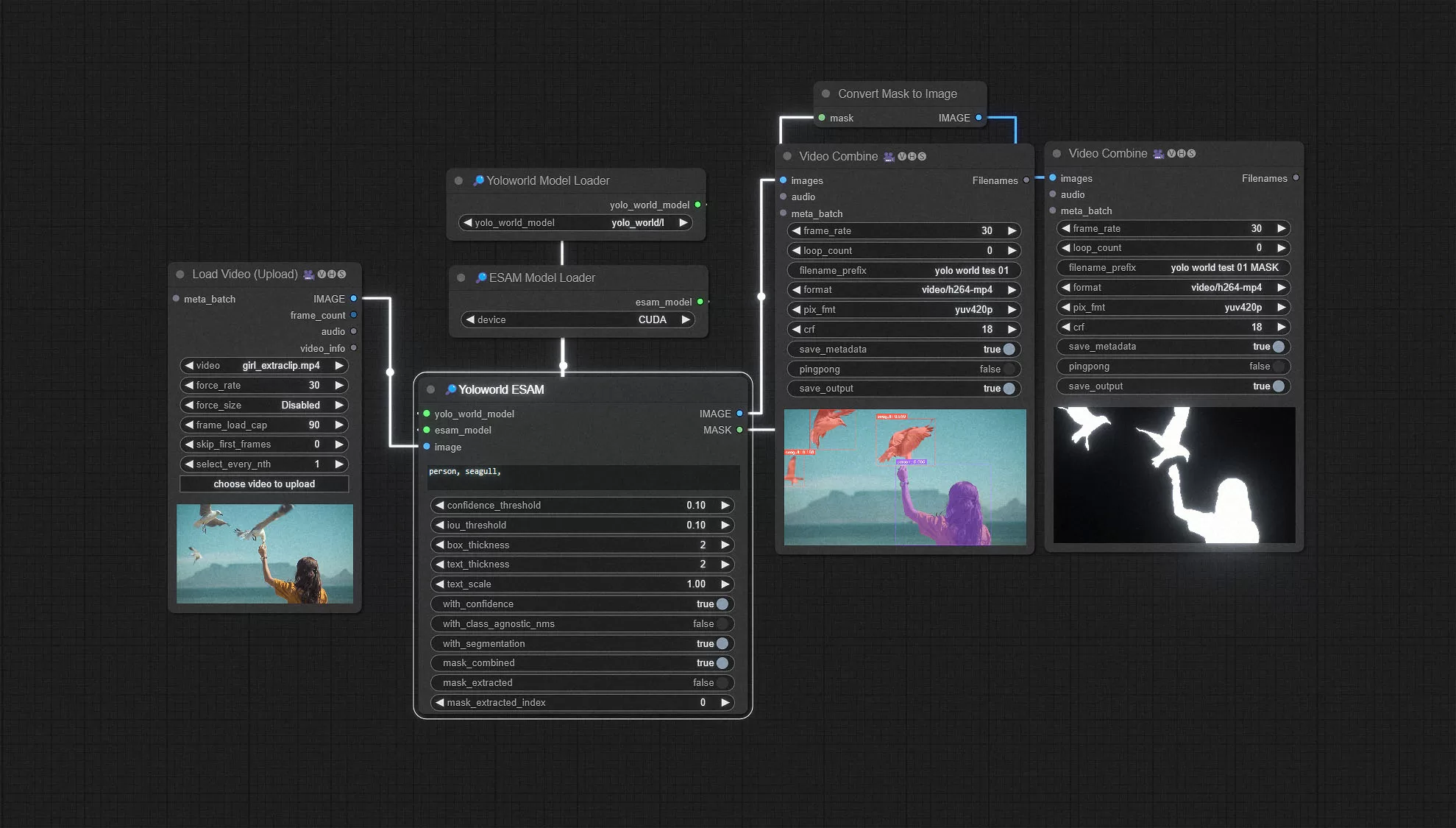

VIDEO DETECTION

By processing a video as a PNG sequence, I was able to apply the model to a video file. Detection remained consistent in videos with clear outlines and subtle movements. However, accuracy diminished when there were numerous overlapping entities or rapid, blurred motion.

The Yoloworld workflow is straightforward and quick to set up. For video processing, I recommend using the VHS (Video Helper Suite) Video Load and Video Combine nodes for small MP4 files. For longer videos, it is better to preprocess them into a full PNG sequence and batch process all images.
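As a rough illustration of the preprocessing step for longer videos, the sketch below builds an ffmpeg command that writes every frame as a numbered PNG and then splits the resulting file names into batches. It assumes ffmpeg is installed; the function names, the `frame_%05d.png` pattern, and the batch size are hypothetical choices for this example, not part of the workflow shown here.

```python
from pathlib import Path


def build_extract_command(video_path, out_dir, pattern="frame_%05d.png"):
    """Return an ffmpeg argument list that writes each video frame as a PNG.

    The output pattern is an assumption; ffmpeg replaces %05d with a
    zero-padded frame counter (00001, 00002, ...).
    """
    return ["ffmpeg", "-i", str(video_path), str(Path(out_dir) / pattern)]


def batch_frames(frame_paths, batch_size):
    """Split frame paths into fixed-size batches, sorted by name."""
    ordered = sorted(frame_paths)
    return [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]


if __name__ == "__main__":
    # Print the command rather than running it, so the sketch has no side effects.
    print(" ".join(build_extract_command("clip.mp4", "frames")))

    # Hypothetical frame names standing in for the extracted sequence.
    frames = [f"frame_{i:05d}.png" for i in range(1, 6)]
    for batch in batch_frames(frames, 2):
        print(batch)
```

To actually extract the frames, the returned list can be passed to `subprocess.run(cmd, check=True)` after creating the output folder. Zero-padded names keep the frames in order when sorted alphabetically, which matters when loading a whole folder as a batch.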

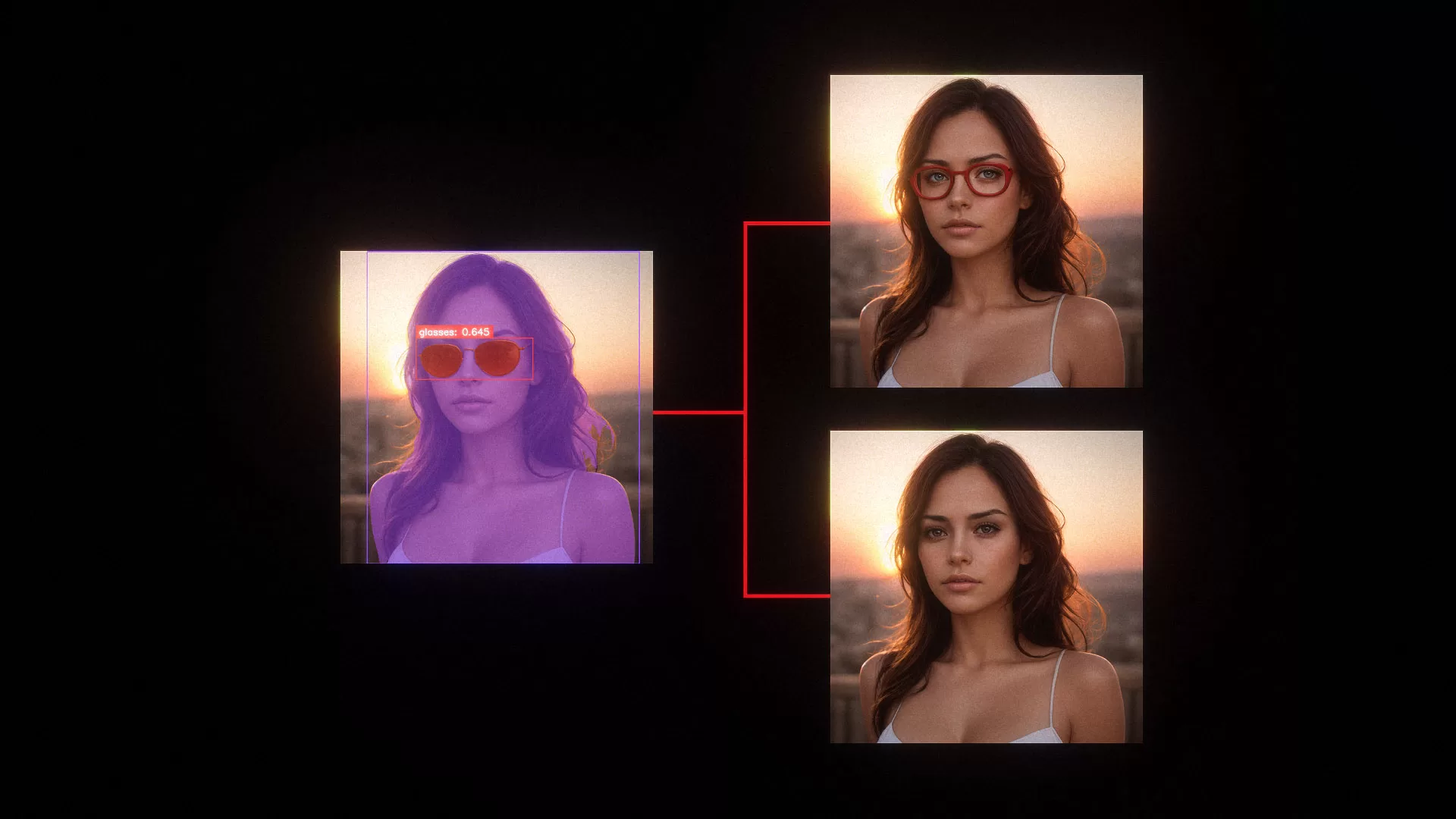

INPAINTING TEST

One of the objectives of integrating computer vision within ComfyUI is to leverage this capability with Generative AI models. In the examples below, Yoloworld is used to automatically detect specific entities, and Stable Diffusion is then employed to inpaint modifications to the image.
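Conceptually, the detection mask decides where generated pixels may replace the original image. The sketch below shows that idea on tiny grayscale images stored as nested lists. It is a simplified illustration under my own assumptions, not the internal behavior of the ComfyUI or Stable Diffusion nodes, and the function name `composite_with_mask` is hypothetical.

```python
def composite_with_mask(original, generated, mask):
    """Blend two same-sized grayscale images using a mask.

    Mask values run from 0.0 (keep the original pixel) to 1.0 (use the
    generated pixel); values in between mix the two, as a feathered
    mask edge would.
    """
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("original, generated, and mask must have the same height")
    result = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("original, generated, and mask must have the same width")
        result.append([
            round(m * g + (1 - m) * o)
            for o, g, m in zip(orig_row, gen_row, mask_row)
        ])
    return result


if __name__ == "__main__":
    original = [[10, 10], [10, 10]]       # untouched source pixels
    generated = [[200, 200], [200, 200]]  # stand-in for inpainted pixels
    mask = [[0, 1], [0.5, 0]]             # stand-in for a detection mask
    print(composite_with_mask(original, generated, mask))
```

Only the pixels under the mask change, which is why a clean detection mask matters: any area it misses stays unmodified, and any area it wrongly covers gets regenerated.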

ADDING TO MY PERSONAL WORKFLOW

A broader application of this pipeline involves using the output as a mask within other programs. I wanted to integrate this process into my art workflows, and for the use cases demonstrated below, it performs exceptionally well.

CONCLUSION

This workflow has proven efficient and fast for masking one or multiple entities in a video, provided there is a clear distinction from the background and minimal movement or motion blur. It can also be extremely useful for automated inpainting pipelines within ComfyUI.

hello@iamfesq.com